2018F: Sentiment Analysis

By: Matthew Fam

Sentiment Analysis (also known as opinion mining or emotion AI) is an application of artificial intelligence that aims to recognize the subjective, emotional content of human language. It sits at the intersection of computer science and computational linguistics and can be applied to speech or text. The main use of sentiment analysis is to analyze text and determine the emotion that it exhibits (sadness, excitement, anger, etc.) or the polarity of its opinion (very positive, positive, neutral, negative, or very negative).

Background

Sentiment analysis is, in itself, an application of natural language processing (NLP) and text mining (often called text analysis), so it is important to know about both before diving into a specific task it can be used for.

Text Mining

Text mining is the process of extracting data from large collections of unstructured, qualitative written data. [1] Text mining involves information extraction (IE), information retrieval (IR), categorization, and clustering to establish connections between different data. It is very heavily founded on statistics and is a form of data mining.

Natural Language Processing (NLP)

Natural language processing is a field concerned with using computers to process, analyze, and in some cases manipulate human language on a large scale. It relates to text mining, but goes further, analyzing not just words, but structure. While text mining is shallow in that it turns language into simple sets of data located within the sentences or words themselves, NLP often looks for more complex information, analyzing the significance behind the language rather than the language itself. [2] Still, there are situations where it is difficult to distinguish text mining from NLP and their classification becomes unclear. The goal of NLP is to allow computers to interact with humans in their own language. Its applications are incredibly wide, including content categorization, topic discovery, contextual extraction, speech-to-text and text-to-speech conversion, document summarization, and machine translation, [3] as well as speech recognition, natural language understanding, and natural language generation. As a result, it is useful in many fields from healthcare to marketing.

History

It is widely accepted that the field of natural language processing took off in the 1950s. In 1950, Alan Turing published “Computing Machinery and Intelligence.” In the paper, Turing proposed measuring a computer’s intelligence by having a human hold a conversation with a computer and another human using text only and seeing whether the human was able to tell which was the computer and which was the human. [4] However, this task was far ahead of its time. Most of the work which began the movement of natural language processing pertained to machine translation. In 1954, the IBM-Georgetown Demonstration made a huge stride in presenting automatic translation from Russian to English. The complexities of language proved to be a huge obstacle which required linguistics to solve. In 1956, Chomsky analyzed language grammars, leading to the creation of Backus-Naur Form (BNF) notation, a way of simplifying and universalizing grammar within set rules of syntactic structure. [5] At the time, advancement was limited by the novelty of the field as well as the lack of tools, whether high-level programming languages or efficient computers. Simple approaches like the machine translation method established at the IBM-Georgetown Demonstration were found to be lacking when translating homonyms or metaphors. [5] Still, much progress was made in compiling lexicons and grammars. After a large amount of funding had been invested, the 1966 ALPAC Report brought the field to a halt: funding was severely cut because progress was deemed too slow.[6]

The second wave of natural language processing placed less of a focus on linguistics and more on computation and artificial intelligence. This was very well represented by Roger Schank’s conceptual dependency work, which focused on turning input sentences into a larger model of meaning based on context, content, and structure as a whole rather than focusing on specifics. [6] Still, results proved much worse than expected.

As understanding of the sheer difficulty of the task at hand spread, a third phase began centered around grammar and logic as well as discourse. Advancement of programming allowed work to progress in new ways as linguistics came back into the picture in the formation of new ways of representing grammars including the computational grammar theory and discourse representation theory.[6] This proved useful in spite of the fact that universal grammars had been proven inadequate for representing natural languages.[5] In essence, many of the resource limitations present before were no longer applicable, so many of the previous ideas were able to be pushed further.

During the 1990s, the most recent phase of development began wherein the foundation of natural language processing was completely changed. Previously NLP had relied on hand-written rules, defined programs, lexicons, and grammars that left little room for variability and thus struggled with the diversity of language as well as scale. New statistical approaches led to statistical language processing which incorporated probabilistic data along with machine learning to allow for much larger scale and better results using fewer and broader rules.[5] For the first time, in this phase, practical applications were commonly seen and continue to be created, many of which even involve speech recognition.

Text mining has a much shorter and less rich history. It developed in the late 1990s, quite a bit after natural language processing, with researchers using text as data.[7] At first it was used by intelligence analysts and biomedical researchers to search for patterns, for instance, links between certain proteins and cancer within biomedical literature.[8] Now, text mining has expanded to other applications including fraud detection. It has also incorporated some natural language processing techniques. In the case of sentiment analysis, both are used together.

Features

An integral part of analyzing language is being able to convert text to a feature vector that can be processed in a machine. There are many approaches for feature engineering which have different purposes and achieve different results. Here are the most common ones:

Term Presence vs Term Frequency

Throughout the history of text mining, specifically information retrieval, emphasis has been placed on term frequency, with each word within a text taking on a specific value and being weighted based on frequency. [9] However, a famous study by Pang et al. found that term presence alone (a value of 1 if a term occurs and 0 if not) gave better performance in polarity classification than assigning repeated words higher values (Pang et al., 2002). Term frequency proved a better predictor of topic than of sentiment.[9] Relatedly, the presence of hapax legomena (words that occur only once in a corpus) proved more indicative of sentiment than more commonly used vocabulary, probably due to a relationship between emphasis and subjectivity.[10]
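The difference between the two encodings can be made concrete with a short sketch. The tokenizer, function names, and sample reviews below are illustrative assumptions, not taken from the cited studies; the point is only that a repeated word raises a frequency value but leaves a presence value at 1.

```python
from collections import Counter

PUNCTUATION = ".,!?;:\"'()"

def tokenize(text):
    """Lowercase, split on whitespace, and strip basic punctuation (a deliberately naive tokenizer)."""
    stripped = (word.strip(PUNCTUATION) for word in text.lower().split())
    return [word for word in stripped if word]

def build_vocabulary(documents):
    """Sorted list of every distinct token across the documents."""
    return sorted({token for doc in documents for token in tokenize(doc)})

def frequency_vector(text, vocabulary):
    """One count per vocabulary term: repeated words get higher values."""
    counts = Counter(tokenize(text))
    return [counts[term] for term in vocabulary]

def presence_vector(text, vocabulary):
    """One flag per vocabulary term: 1 if the term occurs at all, else 0."""
    tokens = set(tokenize(text))
    return [1 if term in tokens else 0 for term in vocabulary]

# Hypothetical sample data.
reviews = ["Great film, great cast, great ending!", "A dull film with a dull plot."]
vocab = build_vocabulary(reviews)
freq = frequency_vector(reviews[0], vocab)
pres = presence_vector(reviews[0], vocab)
print(vocab)
print(freq)
print(pres)
```

In the first review, "great" contributes 3 to the frequency vector but only 1 to the presence vector; every other entry is identical between the two.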

Term Position

Words carry different significance depending on their location within texts, especially longer texts. Although it applies differently in sentiment analysis, IR uses this to put more value on words present in titles or subtitles.[10] In sentiment analysis, words near the beginning and end of a text are sometimes given more weight than those within the body of the text.[10]
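One possible way to express such a weighting is sketched below. The edge size and the weight of 2.0 are hypothetical values chosen for illustration; the sources cited here do not prescribe specific numbers.

```python
from collections import defaultdict

def position_weighted_counts(tokens, edge_size=2, edge_weight=2.0):
    """Count terms, giving extra weight to tokens near the start or end of the text.

    edge_size and edge_weight are illustrative assumptions, not published values.
    """
    n = len(tokens)
    totals = defaultdict(float)
    for index, token in enumerate(tokens):
        near_edge = index < edge_size or index >= n - edge_size
        totals[token] += edge_weight if near_edge else 1.0
    return dict(totals)

# Hypothetical sample text.
tokens = "terrible pacing but the acting was fine overall truly terrible".split()
weighted = position_weighted_counts(tokens)
print(weighted)
```

Here "terrible" appears once in the opening and once in the closing two words, so it accumulates more weight than "fine", which sits in the body of the text.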

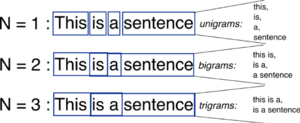

N-gram

An n-gram is a group of n words located adjacent to each other. N-grams are typically used within natural language processing to capture some form of context. However, their use in sentiment analysis is not well understood. According to one study (Pang et al., 235), unigrams were shown to be better than bigrams at classifying movie reviews by sentiment polarity, while another study (Dave et al., 69) found that bigrams and trigrams have better results in some cases when classifying product review polarity.
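As a minimal sketch, n-grams can be extracted by sliding a window of n tokens across a sentence. The function name and sample sentence are illustrative assumptions.

```python
def ngrams(tokens, n):
    """Return every run of n adjacent tokens, in order, as tuples."""
    if n < 1:
        raise ValueError("n must be at least 1")
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

# Hypothetical sample sentence.
tokens = "this movie was not good".split()
unigrams = ngrams(tokens, 1)
bigrams = ngrams(tokens, 2)
trigrams = ngrams(tokens, 3)
print(bigrams)
```

The bigram `("not", "good")` keeps the negation attached to the word it modifies, which is the kind of context a unigram model loses.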

Parts of Speech

Parts of speech are tagged the same way they are in text mining because this proves to be an effective method for word sense disambiguation, that is, determining which definition of a word with multiple meanings is intended.[10] Adjectives have been commonly associated with subjectivity and proven to provide fairly reliable predictions when used alone, but other combinations, mainly adjective-adverb combinations, improve accuracy in many situations.[10] The discovery of the significance of certain nouns in conveying subjectivity has led to further testing of effectiveness given different parts of speech. [9]

Neural Network Approaches

Deep learning models used in sentiment analysis, and NLP as a whole, require some input features that have been processed beyond just the plain text. Typically, word embeddings, which turn words within a lexicon into vectors of continuous real numbers, are used as these inputs.[12] Word embedding involves the creation of a high-dimensional dense vector space that represents different characteristics of words, often determined by linguistic patterns. Word2Vec has been established as the most common word embedding software; it is a neural network in itself that uses one of two models: Continuous Bag of Words (CBOW), which predicts a word from its context words and is best for smaller sets of data, and Skip-Gram (SG), which does the opposite, predicting context words based on the word, a process more useful for larger data sets.[12] In both cases, words with similar contexts (similar syntax, patterns) are placed nearby in the vector space. Alternatives exist, although they are less common.
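The two Word2Vec models differ mainly in how training examples are formed from a sentence. The sketch below only builds those examples and does not train any embedding; the function names and the window size of 1 are assumptions made for illustration.

```python
def context_window(tokens, index, window):
    """Tokens within `window` positions of tokens[index], excluding the token itself."""
    start = max(0, index - window)
    return tokens[start:index] + tokens[index + 1:index + 1 + window]

def cbow_examples(tokens, window=1):
    """CBOW-style examples: (list of context words, target word to predict)."""
    return [(context_window(tokens, i, window), token) for i, token in enumerate(tokens)]

def skipgram_examples(tokens, window=1):
    """Skip-Gram-style examples: (input word, one context word to predict)."""
    return [
        (token, context)
        for i, token in enumerate(tokens)
        for context in context_window(tokens, i, window)
    ]

# Hypothetical sample sentence.
tokens = ["the", "plot", "was", "dull"]
cbow = cbow_examples(tokens)
skipgram = skipgram_examples(tokens)
print(cbow)
print(skipgram)
```

CBOW yields one example per word, with the surrounding words as input, while Skip-Gram yields one example per (word, neighbor) pair, with the word itself as input.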

Many approaches have been tested for creating neural networks to perform sentiment analysis. These include multilayer perceptrons, autoencoders, and convolutional neural networks. Here are the most common approaches:

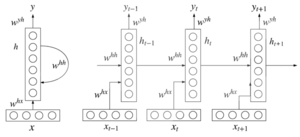

Recurrent Neural Networks (RNN)

Recurrent neural networks (RNNs) are used to account for the temporal aspect of language wherein the order of the words is significant for understanding.[13] Unlike traditional feed-forward networks, RNNs feed the state of the hidden layer at one time step back into the hidden layer at the next, in addition to having an input, hidden, and output layer (Fig 2).

It is this architecture that allows them to incorporate a temporal aspect or a “memory.” Each word is associated with a time step based on the word’s position within the sentence while each time step is associated with its own hidden vector[13].

This means that as the RNN runs, each output is based on all of the previous computations and their effects on the hidden layer. [12] It also leads to a relatively simple architecture without too many parameters, because the same task is performed repeatedly with different inputs. In other words, the same “physical” synapses, with the same weights, are reused at every time step; the weights change only when the network is trained.

This formula gives the calculation used to change the hidden layer between t steps, showing the variable effect of the previous step on the hidden layer as it will be applied to the next step.
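A standard form of this update, consistent with the description above, is sketched here. The symbols are assumptions rather than this article's own notation: $h_t$ is the hidden vector at time step $t$, $x_t$ is the input (word vector) at that step, $W^{H}$ and $W^{X}$ are weight matrices shared across all time steps, and $\sigma$ is an activation function such as the sigmoid.

```latex
h_t = \sigma\left( W^{H} h_{t-1} + W^{X} x_t \right)
```

The size of the entries in $W^{H}$ controls how strongly the previous hidden vector influences the new one, while $W^{X}$ controls the influence of the current word.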

This formula gives the probability that the input exhibits a specific sentiment. A softmax function is used to get values from 0 to 1 to represent probability. [12]
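The recurrent update and the softmax output described above can be sketched together in plain Python. This is a toy forward pass under stated assumptions: the weight names `w_x`, `w_h`, and `w_s`, the two-dimensional inputs, and all numeric values are hypothetical, and the weights are arbitrary rather than trained.

```python
import math

def sigmoid(z):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def rnn_sentiment(inputs, w_x, w_h, w_s):
    """Run one hidden update per time step, then classify from the final hidden vector."""
    hidden = [0.0] * len(w_h)
    for x_t in inputs:
        recurrent = matvec(w_h, hidden)  # contribution of the previous step
        current = matvec(w_x, x_t)       # contribution of the current word
        hidden = [sigmoid(r + c) for r, c in zip(recurrent, current)]
    return softmax(matvec(w_s, hidden))

# Hypothetical, untrained weights and word vectors.
w_x = [[0.5, -0.3], [0.1, 0.8]]
w_h = [[0.2, 0.0], [-0.4, 0.6]]
w_s = [[1.0, -1.0], [-1.0, 1.0]]
inputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
probs = rnn_sentiment(inputs, w_x, w_h, w_s)
print(probs)
```

The same `w_x` and `w_h` are applied at every time step, and the two output values can be read as the probabilities of two sentiment classes.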

However, when dealing with too many time steps, normal RNNs exhibit the vanishing gradient or exploding gradient problem, wherein the gradients used to update the weights become arbitrarily small or large as they pass back through the time steps, rendering the network useless.[12]
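A heavily simplified illustration of why this happens: assume that passing the gradient back through each time step multiplies it by roughly the same factor. The constant factor and the function name are assumptions made for this sketch; in a real network the factor varies from step to step.

```python
def gradient_scale(per_step_factor, num_steps):
    """Overall scaling of a gradient after being multiplied by the same factor at every step."""
    scale = 1.0
    for _ in range(num_steps):
        scale *= per_step_factor
    return scale

vanishing = gradient_scale(0.5, 50)  # factor below 1: shrinks toward zero
exploding = gradient_scale(1.5, 50)  # factor above 1: grows without bound
print(vanishing, exploding)
```

With a factor below 1 the early words in a long sentence have almost no influence on the weight updates, and with a factor above 1 the updates become unusably large.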

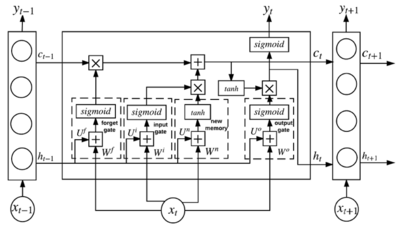

Long Short Term Memory Networks (LSTM)

Long short term memory networks (LSTMs) are RNNs specifically designed for learning long term dependencies. [13][12] They do this by establishing a more efficient process whereby the significance of information is determined so only the relevant “memory” is kept and the rest discarded. While this makes the architecture of the network much more complicated, it makes the task that the network is asked to complete easier.

LSTMs have 4 neural network layers working together with a cell state and a hidden state.[12]

For each time step, t, the LSTM uses a sigmoid function called the “forget gate” to generate a value between 0 (discard) and 1 (keep), which determines how much of the information is kept or discarded based on the output from the previous hidden layer and the current input.[12]

Next, LSTM uses an “input gate” function (first equation in figure) to determine what new information is stored in the cell state.[12]

A tanh function, which squashes its input into the range -1 to 1 much as a sigmoid squashes its input into the range 0 to 1, produces a vector of candidate values to be added to the cell state.[12]

LSTM updates the cell state by combining the input gate and candidate values and incorporating the “forget gate”. [12]

An “output gate” is run which decides which portions of the cell state should be outputted using a sigmoid function as seen.[12]

This equation shows how the output gate (whose values lie between 0 and 1) is multiplied by the tanh of the cell state so only the portions that were decided on contribute.
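The steps above correspond to the standard LSTM equations, sketched here in one place. The notation is an assumption and may differ from the figure this article refers to: $[h_{t-1}, x_t]$ is the previous hidden state concatenated with the current input, $W$ and $b$ are the weights and biases of each layer, $\odot$ is element-wise multiplication, and $C_t$ is the cell state.

```latex
\begin{aligned}
f_t &= \sigma\left(W_f \, [h_{t-1}, x_t] + b_f\right) && \text{forget gate} \\
i_t &= \sigma\left(W_i \, [h_{t-1}, x_t] + b_i\right) && \text{input gate} \\
\tilde{C}_t &= \tanh\left(W_C \, [h_{t-1}, x_t] + b_C\right) && \text{candidate values} \\
C_t &= f_t \odot C_{t-1} + i_t \odot \tilde{C}_t && \text{cell state update} \\
o_t &= \sigma\left(W_o \, [h_{t-1}, x_t] + b_o\right) && \text{output gate} \\
h_t &= o_t \odot \tanh\left(C_t\right) && \text{new hidden state}
\end{aligned}
```

The four layers with their own weights are the forget gate, input gate, candidate layer, and output gate; the cell state update and the new hidden state combine their results without additional weights.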

Applications

Sentiment analysis is an extremely powerful tool that has applications across many fields including business, marketing, social media, government, and healthcare.

Marketing

The most common current uses of sentiment analysis employ data from social media and relate it to business and marketing. Brands can quickly and conveniently assess trends in brand recognition via tools and services like those offered by Brandwatch, which creates a data dashboard and graphical interface for its customers, making the relevant machine learning techniques accessible to those who do not possess the required technical knowledge or skillset. Not only can companies assess how well-known their brands are, but they can determine their associated reputations at a specific moment in time or across a period of time in a quantifiable manner. Assessing reputation across time allows brands to understand whether their popularity is trending upwards or downwards. Additionally, the effect of specific movements, product releases, scandals, marketing campaigns, or other events can be analyzed by assessing how people’s responses changed around the time of said event. More complex versions of sentiment analysis can also determine the speakers from their posts or whatever kind of data is provided. By determining speakers, companies can assess which kinds of people have which kinds of reactions towards their company. This information can be applied to further target the portion of the population that is most likely to conduct business with them or to try and reach out to the portions of the population that are less responsive. Similarly, advanced forms of sentiment analysis can attribute sentiments to specific features or objects, giving companies a better idea of which parts of certain products may or may not spark positive or negative reactions.

Public Health

In public health, sentiment analysis programs, such as Crimson Hexagon (which has since merged with Brandwatch), have been used to assess different trends across populations in order to improve, discover, and address social determinants of health. For instance, the New York City Department of Health and Mental Hygiene has recently attempted to use sentiment analysis to assess stigma around mental health among the city’s population, sentiments exhibited about homelessness, and even sentiments regarding access to healthcare following Trump’s election and changes to immigration and health laws.

Shortcomings

However, there are many shortcomings when it comes to applying sentiment analysis. Naturally, the more complex the problem being addressed, the worse sentiment analysis performs as a tool. Sentiment analysis is at its best categorizing sentiments into broad categories that are relatively easy to discern, such as positive, negative, and neutral. Still, the bigger problem is access to data. The largest, richest, and most useful source of data for people, companies, and corporations using sentiment analysis is social media. However, because of privacy concerns, it is often hard to compile such data. To appeal to users, social media companies attempt to make their data harder to parse and refuse to cooperate with those who want access to data. There are some exceptions, however. Twitter seems to be one of the most willing companies to support research, as it has coordinated with certain sentiment analysis tools to make its data accessible. Crimson Hexagon is an example of this. It previously could also use certain Facebook pages to compile data, although Facebook has moved away from this in hopes of keeping its data more locked up.

References

1. Prato, S. (2013, April 23). What is Text Mining? Retrieved from https://ischool.syr.edu/infospace/2013/04/23/what-is-text-mining/

2. EDUCBA. (2018, October 05). Text Mining vs Natural Language Processing - Top 5 Comparisons. Retrieved from https://www.educba.com/important-text-mining-vs-natural-language-processing/

3. SAS. (2018). What is Natural Language Processing? Retrieved from https://www.sas.com/en_us/insights/analytics/what-is-natural-language-processing-nlp.html

4. Shah, B. (2017, July 12). The Power of Natural Language Processing. Retrieved from https://medium.com/archieai/the-power-of-natural-language-processing-f1ab0aa7d8c4

5. Nadkarni, P. M., Ohno-Machado, L., & Chapman, W. W. (2011). Natural language processing: an introduction. Journal of the American Medical Informatics Association: JAMIA, 18(5), 544-51.

6. Jones, K. S. (1994). Natural Language Processing: A Historical Review. Current Issues in Computational Linguistics: In Honour of Don Walker, 3-16.

7. Gray, K., & Gray, C. (2017, March). Text Analytics: A Primer. Retrieved from https://www.kdnuggets.com/2017/03/text-analytics-primer.html

8. Grimes, S. (2007, October 30). A Brief History of Text Analytics. Retrieved from http://www.b-eye-network.com/view/6311

9. Pang, B., & Lee, L. (2008). Opinion Mining and Sentiment Analysis. Foundations and Trends in Information Retrieval, 2(1-2), 1-135.

10. Mukherjee, S., & Bhattacharyya, P. (2013). Sentiment Analysis: A Literature Survey.

11. Stackoverflow. (2013, August 12). What exactly is an n Gram? Retrieved from https://stackoverflow.com/questions/18193253/what-exactly-is-an-n-gram

12. Zhang, L., Wang, S., & Liu, B. (2018, January 30). Deep Learning for Sentiment Analysis: A Survey.

13. Deshpande, A. (2017, July 13). Perform sentiment analysis with LSTMs, using TensorFlow. Retrieved from https://www.oreilly.com/learning/perform-sentiment-analysis-with-lstms-using-tensorflow